Last night, DeepSeek launched its own o1-like model, DeepSeek R1-Lite, and made it available right away. All users can try it on the official website, with 50 uses per day. Try it at: https://chat.deepseek.com

By Woyin AI's count, five Chinese AI products now offer something like “slow thinking”: Kimi Exploration Edition, TianGong AI Advanced Search, 360AI Search “Slow Thinking Mode”, Zhipu AI Search Agent, and DeepSeek “Deep Thinking”. However, all of these except DeepSeek's are really closer to Perplexity's Pro search and would be more accurately described as advanced or in-depth search. Their “slow thinking” applies to searching; they are not o1-like models that reason through logic.

Question 1: How many 1s are there in 010111010101101101? If an LLM (large language model) answers without CoT (chain-of-thought) reasoning, relying only on its ordinary attention-based ability to answer in one pass, it is quite likely to miscount, as Kimi did. Now let's look at R1's answer.

Well, this string of numbers is 010111010101101101.

I need to count how many 1s are in it. First of all, I have to look at them one by one. It may be a bit troublesome, but it shouldn’t be difficult. Let me start counting from left to right. The first number is 0, not 1, so I skip it. The second is 1, I count one. The third is 0, skip it. The fourth is 1, now there are two. The fifth is 1, three. The sixth is 1, four. The seventh is 0, skip it. The eighth is 1, five. The ninth is 0, skip it. The tenth is 1, six. The eleventh is 0, skip it. The twelfth is 1, seven. The thirteenth is 0, skip it. The fourteenth is 1, eight. The fifteenth is 1, nine. The sixteenth is 0, skip it. The seventeenth is 1, ten. The last one is also 1, eleven. Wait a minute, I’ll confirm again. Maybe I counted wrongly just now. I’ll count from the beginning one more time.

The first digit: 0, not 1. The second digit: 1, one. The third digit: 0, not 1. The fourth digit: 1, two. The fifth digit: 1, three. The sixth digit: 1, four. The seventh digit: 0, not 1. The eighth digit: 1, five. The ninth digit: 0, not 1. The tenth digit: 1, six. The eleventh digit: 0, not 1. The twelfth digit: 1, seven. The thirteenth digit: 0, not 1. The fourteenth digit: 1, eight. The fifteenth digit: 1, nine. The sixteenth digit: 0, not 1. The seventeenth digit: 1, ten. The eighteenth digit: 1, eleven. It seems the same as before. There are a total of eleven 1s. However, I’ll check it one more time to see if there are any omissions or overcounts.

0 1 0 1 1 1 0 1 0 1 0 1 1 0 1 1 0 1

From left to right:

1. 0

2. 1 (1)

3. 0

4. 1 (2)

5. 1 (3)

6. 1 (4)

7. 0

8. 1 (5)

9. 0

10. 1 (6)

11. 0

12. 1 (7)

13. 1 (8)

14. 0

15. 1 (9)

16. 1 (10)

17. 0

18. 1 (11)

It seems there are indeed eleven 1s.

R1 counted the string three times. The first pass is plain mental counting from left to right, the way we humans usually do it, and it arrives at eleven 1s.

Still, R1 worried that it might have miscounted, so it ran two more checks, which is rather endearing.

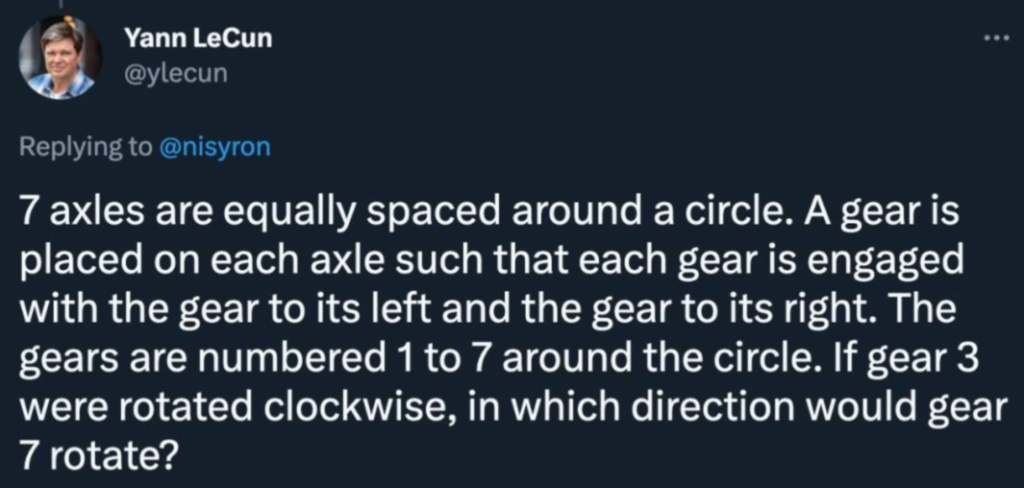
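For comparison, this count is trivial to check deterministically outside an LLM. The following is a minimal Python sketch; `count_ones` and `running_tally` are illustrative helper names, not part of any DeepSeek tool. `running_tally` lists every position with the running total of 1s, the same shape as R1's third pass.

```python
# Hypothetical helpers for Question 1; not part of any DeepSeek tool.
BITS = "010111010101101101"


def count_ones(bits: str) -> int:
    """Return how many '1' characters appear in bits."""
    return bits.count("1")


def running_tally(bits: str) -> list[tuple[int, str, int]]:
    """Return (1-based position, digit, running count of 1s) for every digit,
    mirroring a digit-by-digit count like R1's third pass."""
    tally = []
    ones = 0
    for pos, digit in enumerate(bits, start=1):
        if digit == "1":
            ones += 1
        tally.append((pos, digit, ones))
    return tally


if __name__ == "__main__":
    for pos, digit, ones in running_tally(BITS):
        print(f"{pos:>2}. {digit} ({ones})")
    print("total:", count_ones(BITS))  # total: 11
```

The script confirms eleven 1s. The position-by-position listing is also handy for auditing a transcript: in this string the 13th digit is 1 and the 14th is 0, so R1's first two passes mislabel positions 13 to 17 even though their totals come out right.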

Question 2: From June 5, 2022, to November 12, 2024, how many days are there in total? This question came up in the Wenyuan AI group a few days ago. Everyone tested various models and got different answers, some of them quite far off. Let's ask R1. Again, it works the problem through several logical approaches and cross-checks them to confirm the answer. Impressive: at last, an AI got it right.

Question 3: Suppose a laboratory creates an anti-gravity substance that makes things levitate. A white lab mouse eats the substance and flies; then an owl eats the mouse, and the owl flies too. Why does the owl fly? This classic question circulating online used to stump o1: with step-by-step leading questions, o1 mistakenly concluded that ‘the owl flies because it indirectly consumed the anti-gravity substance.’ R1, however, clearly knows the common-sense fact that ‘owls can fly naturally,’ and that the ‘anti-gravity substance’ only enhances the owl’s flying ability.

Question 4: 7 axles are equally spaced around a circle. A gear is placed on each axle such that each gear is engaged with the gear to its left and the gear to its right. The gears are numbered 1 to 7 around the circle. If gear 3 were rotated clockwise, in which direction would gear 7 rotate? The ‘7 gears problem’ is a classic mechanical-transmission puzzle posed by Yann LeCun, Chief AI Scientist at Meta, to test a robot’s logical reasoning and comprehension. R1 answered correctly once again, with sound logic and reasoning throughout.

Question 5: Since everyone in prison is a criminal, why don’t the police go to prison to catch bad guys? This is a brain teaser from the. An LLM that isn’t careful can easily go astray here. R1’s answer is perfectly logical; I give it full marks.
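Questions 2 and 4 both have answers that can be checked mechanically, which is part of what makes them good LLM probes. Below is a minimal Python sketch; `days_between` and `ring_can_turn` are illustrative names. The date gap uses the standard library's `datetime.date` subtraction. The gear question is treated as a parity check around a ring, under the usual assumption that two meshed gears always turn in opposite directions.

```python
from datetime import date


def days_between(start: date, end: date) -> int:
    """Number of days from start to end (end minus start, start day not counted)."""
    return (end - start).days


def ring_can_turn(n_gears: int) -> bool:
    """Whether n gears in a closed ring, each meshed with both neighbours, can turn.

    Assumption: two meshed gears always turn in opposite directions. We walk
    the ring flipping direction at each mesh, then check the closing mesh
    between gear n and gear 1.
    """
    direction = {1: "cw"}  # starting gear is arbitrary; by symmetry any gear works
    for gear in range(2, n_gears + 1):
        direction[gear] = "ccw" if direction[gear - 1] == "cw" else "cw"
    # Gear n also meshes with gear 1, so the two must turn in opposite directions.
    return direction[n_gears] != direction[1]


if __name__ == "__main__":
    print(days_between(date(2022, 6, 5), date(2024, 11, 12)))  # 891
    print(ring_can_turn(7))  # False: a ring of 7 gears locks
```

The date gap is 891 days. If “in total” is read as counting both June 5, 2022 and November 12, 2024, the answer becomes 892. For the gears, alternating directions around 7 gears brings gear 7 back to the same direction as gear 1, even though the two mesh, so no consistent assignment exists. The ring jams, and gear 3 cannot be turned at all. With an even number of gears, the same check passes.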

Question 6: Xiaohong has 3 brothers and 3 sisters. How many sisters does Xiaohong’s brother have? The answer is correct, and the reasoning is very solid. At this point, some people may object: these questions are very simple, and I could answer them correctly myself. Well, if you think they’re simple, let’s ask some other AIs. Does the logic of ‘Xiaohong’s brothers are Xiaohong’s brothers, not sisters. So, Xiaohong’s brothers have 3 sisters.’ hold up? Cue a face full of question marks…

Question 7: Reverse all the letters of this string of characters: WoshiWoYinAI. The reversal never comes out right, and it keeps misunderstanding what I mean.

Question 8: A company was burglarized, and A, B, C, and D were suspected and detained. The investigation established that the thief is one of them. A said: “It was C who stole.” B said: “I didn’t steal.” C said: “I didn’t steal either.” D said: “If B didn’t steal, then it was me who stole.” It has been found that only one person told a lie. Based on these conditions, who can be determined to be the thief? The reasoning is correct: D is the thief. Regular readers of my articles will know that I have put this question to many AIs, and few of them answered correctly.

Question 9: How many days are there between Zhen Huan’s and Xue Baochai’s birthdays? “I don’t know.” Presumably R1’s training data doesn’t include these two characters’ birthdays, and it won’t use search to answer the question either.

Question 10: Why doesn’t she love me? Alright, you don’t need to answer. I know… (I stopped it manually.)

To sum up: the test cases covered counting, calculation, reasoning, comprehension, search, and abstraction, and R1 answered most of them correctly. Even when it got something wrong, it could still “justify” its answer with its own coherent line of reasoning.
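Questions 6 to 8 are just as easy to check outside a model. Below is a minimal Python sketch; all helper names are illustrative. Question 6 assumes, as the name suggests, that Xiaohong is a girl, so her brother counts her among his sisters. Question 7 is plain string slicing. Question 8 is solved by brute force: try each suspect as the thief and keep those for whom exactly one statement is false. D's conditional is read as material implication, so it reduces to “B stole or D stole”.

```python
SUSPECTS = "ABCD"


def sisters_of_brother(sisters_of_xiaohong: int) -> int:
    """Question 6. Assumes Xiaohong is a girl, so her brother also counts her."""
    return sisters_of_xiaohong + 1


def reverse_letters(text: str) -> str:
    """Question 7: reverse the characters of a string."""
    return text[::-1]


def statements(thief: str) -> dict[str, bool]:
    """Question 8: truth value of each suspect's statement if `thief` stole."""
    return {
        "A": thief == "C",  # "It was C who stole."
        "B": thief != "B",  # "I didn't steal."
        "C": thief != "C",  # "I didn't steal either."
        # "If B didn't steal, then it was me" is equivalent to "B stole or D stole"
        "D": thief == "B" or thief == "D",
    }


def solve_thief() -> list[str]:
    """Suspects for whom exactly one of the four statements is false."""
    return [t for t in SUSPECTS if list(statements(t).values()).count(False) == 1]


if __name__ == "__main__":
    print(sisters_of_brother(3))            # 4
    print(reverse_letters("WoshiWoYinAI"))  # IAniYoWihsoW
    print(solve_thief())                    # ['D']
```

Only D survives the brute-force check, matching R1's answer. Under the stated assumption, the brother has 4 sisters, and the reversed string is IAniYoWihsoW.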
Regardless of accuracy, I'd like to give DeepSeek a huge thumbs up for exposing R1's chain of thought. When the LLM gets an answer wrong, the visible reasoning shows me exactly where it went wrong, so I can design prompts or workflows specifically to improve its performance. With other AIs, you just have to accept a wrong result, with no way to verify or reproduce the reasoning. DeepSeek has set a great precedent by exposing the chain of thought. The web version of R1 still has some problems, such as weak search, thinking at length over simple questions, and crashing on the last question… But after all, this is just a preview version with approximately 16B parameters. The official version of DeepSeek boasts 200 billion parameters and is still being optimized ahead of release.

Best of all, once the official R1 model launches, it will be fully open-sourced! Excellent! Excellent! Excellent! DeepSeek is truly impressive!